Storage into MySQL Database Service(MDS)

using Data Integration Service – Part 2

In this tutorial, I will walk through about Importing .CSV file from Oracle Cloud Object Storage into MDS using OCI DI Service( Oracle Data Integration service)

There are multiple ways to import the backup/csv file from object storage to MySQL database service(MDS) , below are some of options you may try in case you have DBA background

[1.] Using Parallel Table Import Utility

https://dev.mysql.com/doc/mysql-shell/8.0/en/mysql-shell-utilities-parallel-table.html

[2.] Mount the Object Storage using S3fs-fuse S3 compatible API, and upload the files into MDS.

[3.] Using Oracle Cloud Service(Data Integration) Service. >> this blog is all about

When to use this approach - OCI Data Integration Service ?

Suppose you have large CSV file or else MySQL backup file or else you have database backup from multiple supported sources(RDS, MSSQL, PostgreSQL ,Aurora) and you wanted to import into MySQL database service(MDS) from object storage , then it’s easy to lift-shift the file into MySQL Database Service and run analytics and machine learning etc.

Supported data sources as below

https://docs.oracle.com/en-us/iaas/data-integration/using/data- assets.htm#data-sources

In case you wanted to know what is OCI Data Integration service (OCI DI) then below web link will have detailed info:-

What is Data Integration Service ?

Oracle Cloud Infrastructure (OCI) Data Integration is a fully managed,

serverless, native cloud service that helps you with common extract, load,

and transform (ETL) tasks.

These tasks include ingesting data from various sources, cleansing,

transforming, and reshaping that data, and then efficiently loading it to

target data sources on OCI

more info: https://www.oracle.com/integration/data-integration/

Let’s get started...

High level work flow to upload CSV file from object storage into MDS as below:-

-

Make sure Object storage has CSV file- acting as Source .

-

Make sure MDS has table with matching attribute as CSV file- acting

as Target

-

Make sure you have adequate policies defined to use data

integration service.

-

Create Data Integration task between source and target

-

Run the task and validate the output

Create Required Policies to use for data integration service

Design Data Integration Task for the Import

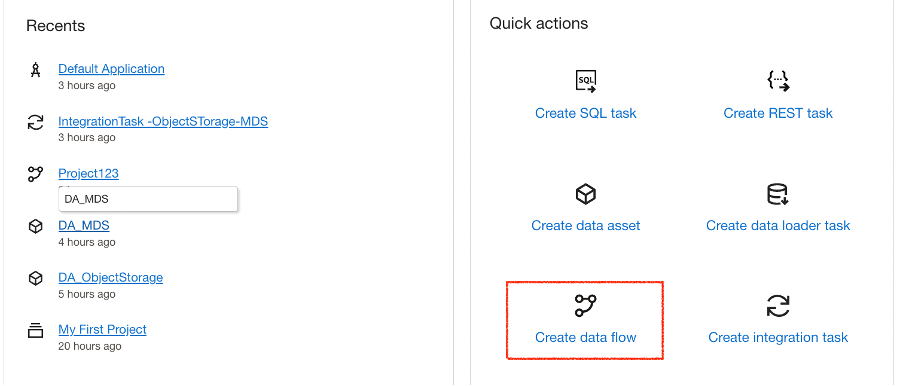

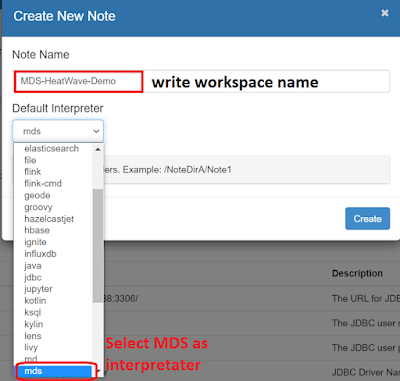

By going to :- Menu→Analytics & AI→Data Integration→Workspaces

Create data asset for Object storage

Create data asset for MDS

Create Data Flow

A data flow is a visual program representing the flow of data from source data assets, such as a database or flat file, to target data assets, such as a data lake or data warehouse.

Define Source details : CSV file details

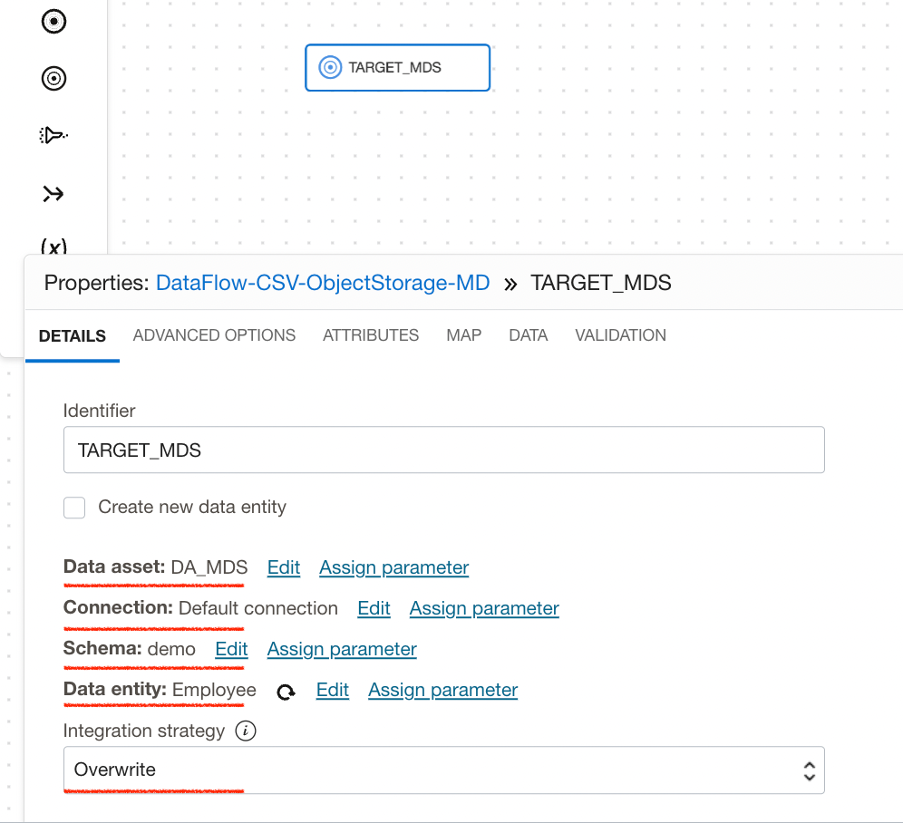

Define Target details : MDS details

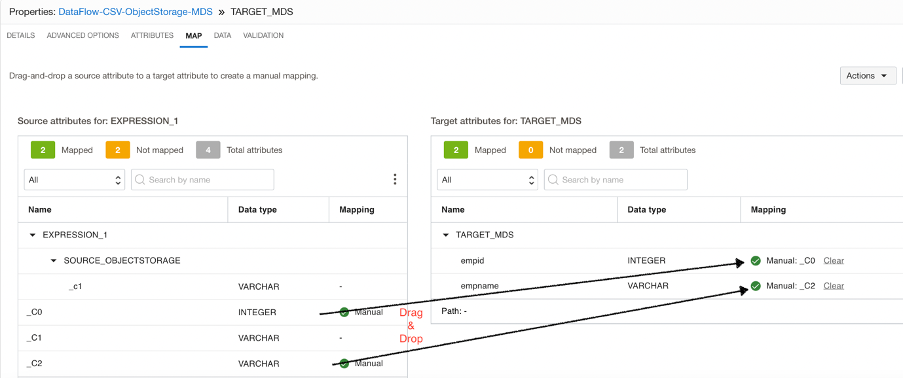

Make data flow between Source and Target

hange the data type form varchar to INT , in case data type is having mismatch between source and target , always ensure both side have same data type to work the ETL.

For that attach EXPRESSION to the source and convert the required

column data type if required or else let it be as it is , this step could be

optional.

Other option is instead of changing data type on the sources you get

imported data first and then due to the data type changing by “ALTER

TABLE ...” command

Click On Target : target_MDS

click on the Map tab on the Properties panel. Drag and drop the fields with NO Auto in the mapping column (from left to right). Do it until they are all mapped. You can expand / shrink the Properties canvas. Once mapping is done make sure you click on “Validation” , ensure for ZERO error.

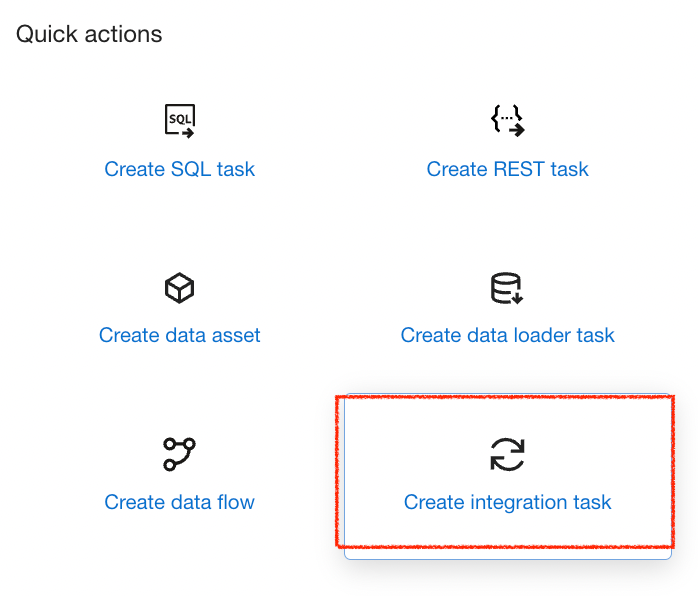

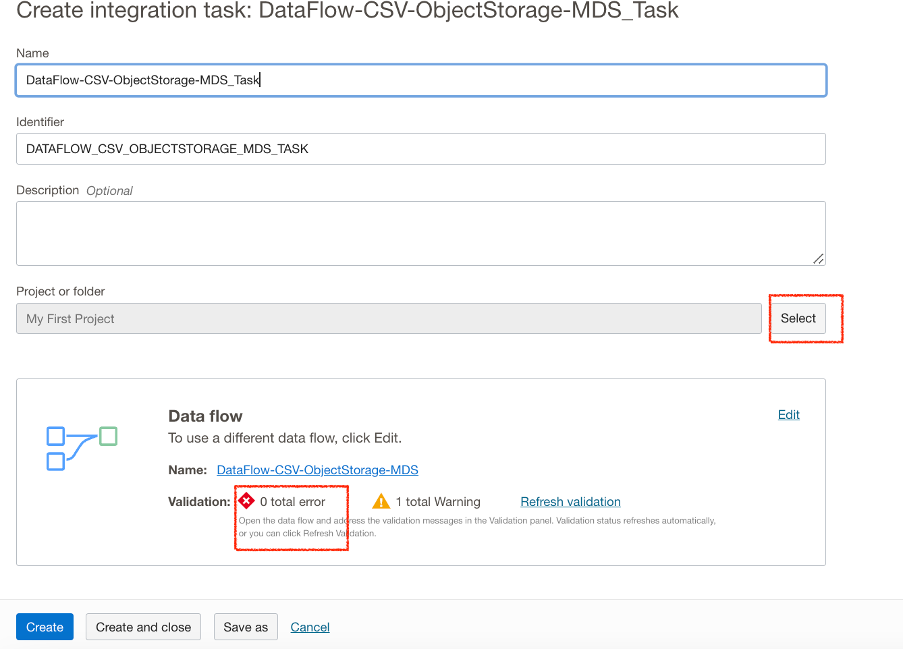

Create data integration Task

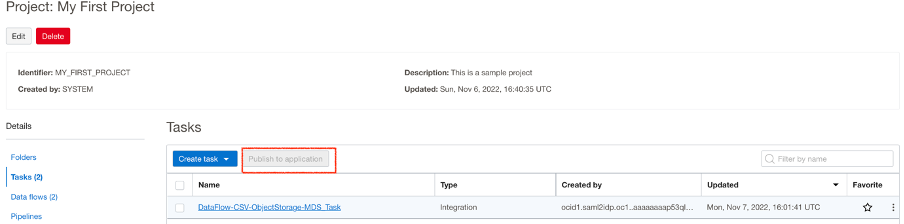

Publish the Integration Task

Go to Projects on the home screen. >> Click on My First Project>> click

on Tasks>> Publish to Application.

Run the Task

Go back to the Home screen and click Applications and Run

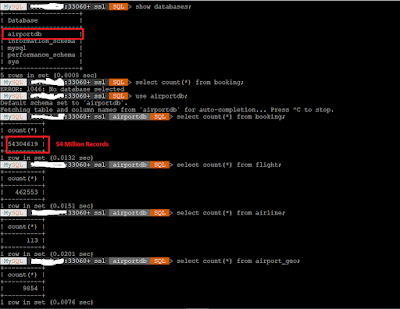

Validate the output

Login to MySQL Database Service and Validate the output

MySQL 10.0.x.x:33060+ ssl demo SQL > select * from Employee;

+-------+----------+

| empid | empname |

+-------+----------+

| 100 | chandan |

| 200 | chandani |

+-------+----------+

2 rows in set (0.0007 sec)

That’s it !!!

In this blog, you have seen that you can successfully setup an OCI Data Integration service in Oracle Cloud Infrastructure.

Utilising the steps, you can now create data assets, data flows to transform and move the data to desired targets.

It’s user friendly managed service which helps you to move off from different sources like AWS RDS for MySQL ,Aurora, Google cloud for MySQL ,MSSQL ,PostgreSQL into MySQL Database Service(MDS).

cc